Today, 26 February 2024, Mistral AI unveils Mistral Large, its latest and most advanced language model.

Mistral Large: A Marvel of Cutting-Edge Text Generation

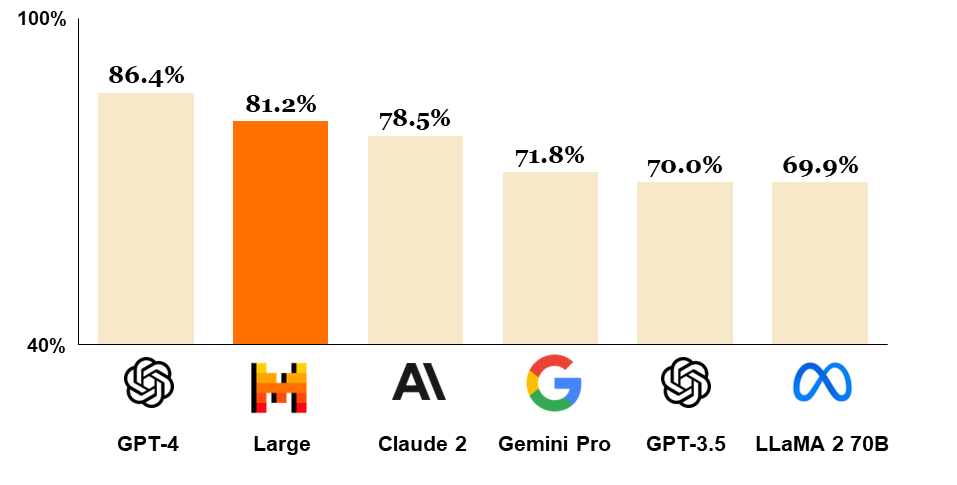

Mistral Large is Mistral’s new flagship model. It offers top-tier reasoning capabilities and is built for complex multilingual reasoning tasks such as text understanding, transformation, and code generation. According to Mistral, it is the world’s second-ranked model generally available through an API, behind only GPT-4 (Figure 1).

Figure 1: Comparison of GPT-4, Mistral Large (pre-trained), Claude 2, Gemini Pro 1.0, GPT-3.5, and LLaMA 2 70B on MMLU (Massive Multitask Language Understanding).

Mistral AI – Enhanced Capabilities and Strengths

Mistral Large introduces several capabilities that set it apart from other language models:

- Multilingual Fluency: Mistral Large is natively fluent in English, French, Spanish, German, and Italian, with a nuanced understanding of grammar and cultural context.

- Expanded Context Window: Its 32K-token context window allows precise recall of information from large documents.

- Instruction-Following Precision: Its precise instruction following lets developers design their own moderation policies; Mistral uses it to implement the system-level moderation of le Chat.

- Function Calling: Mistral Large is natively capable of function calling. Combined with the constrained output mode on la Plateforme, this makes it easier to build applications and modernize tech stacks at scale.
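A 32K-token window is a fixed budget that the instructions, the document, and (typically) the generated answer must share. The sketch below checks that budget on the client side and splits an oversized document into chunks. It is illustrative only: the 4-characters-per-token ratio is a rough assumption rather than Mistral’s tokenizer, the window is treated as exactly 32,000 tokens, and `estimate_tokens`, `fits_in_context`, and `chunk_document` are hypothetical helper names.

```python
# Client-side budget check for a 32K-token context window (illustrative sketch).
# ASSUMPTION: ~4 characters per token is a crude heuristic, not Mistral's
# tokenizer; use the real tokenizer when exact counts matter.

CONTEXT_WINDOW_TOKENS = 32_000  # "32K tokens"; exact limit assumed
CHARS_PER_TOKEN = 4             # hypothetical average; varies by language and text


def estimate_tokens(text: str) -> int:
    """Approximate token count by ceiling division of the character count."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(prompt: str, document: str, reserved_for_output: int = 1_000) -> bool:
    """True if prompt + document + room for the answer stays within the window."""
    used = estimate_tokens(prompt) + estimate_tokens(document)
    return used + reserved_for_output <= CONTEXT_WINDOW_TOKENS


def chunk_document(document: str, prompt: str, reserved_for_output: int = 1_000) -> list[str]:
    """Split a document into pieces that each fit alongside the prompt."""
    budget_tokens = CONTEXT_WINDOW_TOKENS - estimate_tokens(prompt) - reserved_for_output
    if budget_tokens <= 0:
        raise ValueError("the prompt alone exceeds the context budget")
    chunk_chars = budget_tokens * CHARS_PER_TOKEN
    return [document[i:i + chunk_chars] for i in range(0, len(document), chunk_chars)]


if __name__ == "__main__":
    doc = "x" * 200_000
    prompt = "Summarize:"
    print(fits_in_context(prompt, doc))          # the whole document is too large
    print(len(chunk_document(doc, prompt)))      # number of chunks that each fit
```

For example, a 200,000-character document does not fit next to a short prompt, but `chunk_document` splits it into two pieces that each do. Splitting on raw character offsets can cut sentences in half; splitting on paragraph boundaries is a common refinement.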

Partnership with Microsoft for Azure Distribution

Mistral’s mission is to democratize frontier AI. As a step toward that goal, Mistral has announced a partnership with Microsoft that brings Mistral models to Azure, making them accessible to a broader audience. Mistral Large is available through Azure AI Studio and Azure Machine Learning, with a user experience comparable to Mistral’s own APIs.

Three Access Points to Mistral Models

- La Plateforme: Securely hosted on Mistral’s infrastructure in Europe, la Plateforme gives developers access to a comprehensive range of models for building applications and services.

- Azure: Mistral Large is available through Azure AI Studio and Azure Machine Learning, and beta customers have already used it successfully.

- Self-Deployment: For the most sensitive use cases, Mistral models can be deployed in your own environment, with access to the model weights. Contact the Mistral team for details and for success stories with this deployment mode.

Benchmarking Mistral Large’s Capabilities

Here is how Mistral Large performs across several benchmark categories:

- Reasoning and Knowledge: Mistral Large shows strong reasoning capabilities on standard benchmarks, ranking behind only GPT-4 on MMLU (Figure 1).

- Multilingual Capabilities: On the French, German, Spanish, and Italian versions of HellaSwag, ARC Challenge, and MMLU, Mistral Large strongly outperforms LLaMA 2 70B.

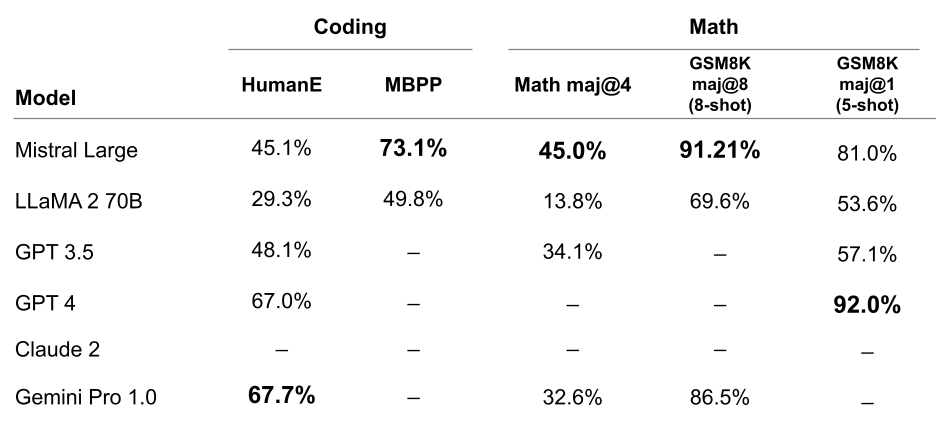

- Maths & Coding: Mistral Large delivers top performance on coding and math tasks across a suite of popular benchmarks.

Performance of the leading LLMs on the market on popular coding and math benchmarks: HumanEval pass@1, MBPP pass@1, MATH maj@4, GSM8K maj@8 (8-shot), and GSM8K maj@1 (5-shot).
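The metric suffixes in the caption describe how each score is computed: pass@1 counts a coding problem as solved if a generated program passes its unit tests, and maj@k samples k answers per problem and scores only the most frequent one against the reference. A minimal sketch of both follows, assuming answers are already normalized strings and that ties in the vote go to the answer seen first (a hypothetical convention). The `pass_at_k` formula is the unbiased estimator introduced with HumanEval (Chen et al., 2021); it is not necessarily the exact harness Mistral used.

```python
from collections import Counter
from math import comb


def majority_vote(answers: list[str]) -> str:
    """Most frequent answer; ties go to the answer encountered first."""
    return Counter(answers).most_common(1)[0][0]


def maj_at_k(samples_per_problem: list[list[str]], references: list[str]) -> float:
    """maj@k: fraction of problems whose majority answer over k samples is correct."""
    correct = sum(
        majority_vote(samples) == ref
        for samples, ref in zip(samples_per_problem, references)
    )
    return correct / len(references)


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples of which c passed the unit tests.

    pass@k = 1 - C(n - c, k) / C(n, k); for k = 1 this reduces to c / n.
    """
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    samples = [["42", "42", "41", "42"], ["7", "8", "8", "9"], ["3", "3", "5", "3"]]
    refs = ["42", "7", "3"]
    print(maj_at_k(samples, refs))   # majority is right on problems 1 and 3
    print(pass_at_k(10, 3, 1))       # 3 of 10 samples passed
```

The majority vote is why maj@k scores can exceed single-sample accuracy: occasional wrong samples are outvoted as long as the correct answer is the most common one.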

Introducing Mistral Small: Optimized for Low Latency Workloads

Alongside Mistral Large, Mistral introduces Mistral Small, a model optimized for low latency and cost. It outperforms Mixtral 8x7B while having lower latency, and it sits between Mistral’s open-weight offering and its flagship model.

Streamlined Endpoint Offering

To simplify its endpoint offering, Mistral now groups endpoints into two categories:

- Open-Weight Endpoints: Competitively priced; these comprise open-mistral-7B and open-mixtral-8x7b.

- New Optimized Model Endpoints: mistral-small-2402 and mistral-large-2402. The mistral-medium endpoint is maintained but will not be updated.
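Choosing between the optimized endpoints comes down to the `model` field of a request. The following sketch builds, without sending, a chat completion request for one of the two new endpoint names. The URL, headers, and body layout are assumptions based on Mistral’s public chat completions API, and `pick_model` encodes a hypothetical routing rule (large for complex reasoning, small for latency- and cost-sensitive work) drawn from the model descriptions above.

```python
import json
import urllib.request

# Endpoint names come from the announcement; the URL and payload shape are
# assumptions based on Mistral's public chat completions API.
API_URL = "https://api.mistral.ai/v1/chat/completions"
OPTIMIZED_MODELS = {"small": "mistral-small-2402", "large": "mistral-large-2402"}


def pick_model(needs_complex_reasoning: bool) -> str:
    """Hypothetical routing rule: large for hard reasoning, small for low latency and cost."""
    return OPTIMIZED_MODELS["large" if needs_complex_reasoning else "small"]


def build_chat_request(api_key: str, model: str, user_message: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for a single-turn chat completion."""
    body = {"model": model, "messages": [{"role": "user", "content": user_message}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("YOUR_API_KEY", pick_model(False), "Classify this ticket.")
    print(req.get_method(), req.full_url)
    print(json.loads(req.data)["model"])
```

Passing the result to `urllib.request.urlopen` would send it; that step is omitted here because it requires a real API key.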

Comprehensive View of Performance/Cost Tradeoffs

Mistral’s benchmarks lay out the performance/cost tradeoffs across these endpoints, helping users choose the model that fits their specific requirements.

Enhancements Beyond Models

Beyond the new models, Mistral has made several improvements to the user experience:

- Multi-Currency Pricing: Organizations can now manage multi-currency pricing on la Plateforme.

- Reduced Latency: Latency has been significantly reduced on all endpoints.

Unlocking New Possibilities with JSON Format and Function Calling

Mistral Large and Mistral Small add two features for developers:

- JSON Format Mode: Forces the model’s output to be valid JSON. This makes it easy to extract structured information and feed the result directly into the rest of a pipeline.

- Function Calling: Developers can connect Mistral endpoints to their own tools, enabling more complex interactions with internal code, APIs, or databases. See Mistral’s function calling guide for details.
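Both features are easiest to see in the shape of a request and of a tool call. The sketch below declares one tool, builds a request body with either the tool list or JSON mode enabled, and executes a tool call the way an application would after the model requests it. The tool `get_order_status` and its fake data are hypothetical, and the `tools`, `response_format`, and tool-call field names are assumptions; Mistral’s function calling guide is the authority on the exact format.

```python
import json


# Hypothetical local tool; in a real system this might query an internal database or API.
def get_order_status(order_id: str) -> dict:
    fake_db = {"A100": "shipped", "B200": "pending"}
    return {"order_id": order_id, "status": fake_db.get(order_id, "unknown")}


# Tool description as a JSON Schema (field layout assumed; check the function calling guide).
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the status of an order by its ID.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string", "description": "The order ID."}},
            "required": ["order_id"],
        },
    },
}]

# Maps tool names the model may request to local Python callables.
LOCAL_FUNCTIONS = {"get_order_status": get_order_status}


def build_request_body(model: str, messages: list[dict], *,
                       json_mode: bool = False, with_tools: bool = False) -> dict:
    """Build a chat request body with optional JSON mode and/or tool declarations."""
    body: dict = {"model": model, "messages": messages}
    if json_mode:
        body["response_format"] = {"type": "json_object"}  # assumed field name
    if with_tools:
        body["tools"] = TOOLS
    return body


def run_tool_call(tool_call: dict) -> str:
    """Execute one model-requested tool call and return its result as a JSON string."""
    name = tool_call["function"]["name"]
    args = json.loads(tool_call["function"]["arguments"])  # arguments arrive as a JSON string
    result = LOCAL_FUNCTIONS[name](**args)
    return json.dumps(result)


if __name__ == "__main__":
    simulated_call = {"function": {"name": "get_order_status",
                                   "arguments": json.dumps({"order_id": "A100"})}}
    print(run_tool_call(simulated_call))
```

In a full loop, the string returned by `run_tool_call` would be sent back to the model in a follow-up message so it can compose its final answer. With JSON mode on, the reply’s `content` can be parsed directly with `json.loads`.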

Try Mistral Large and Mistral Small Today

Mistral Large is available today on la Plateforme and Azure, and can also be tried in le Chat, Mistral’s beta assistant demonstrator. The team welcomes feedback as it continues to push the boundaries of language models and make AI more accessible to all.